Extreme benchmark feedback using GitLab CI

I am quite notorious for (ab)using GitLab’s CI. Ever since I started playing with it at the start of 2016, things have only gotten worse.

Currently, my website is automatically built and deployed on every git push.

When I change my repository of chess annotations, the website pipeline is triggered as well, which updates the chess page with an interactive board.

GitLab CI is awesome. It runs your jobs in Docker containers, so you can literally run arbitrary code in there. GitLab also lets you keep secrets in variables, which can give your jobs access to just about any server. So, let’s have a look at what I’ve done now!

Benchmarking in Rust

Another thing I am known for is my, ehh, enthusiasm for Rust. The language doesn’t really matter for what follows, but Rust is what I use in this post.

Rust has an integrated benchmarking system, and while that is a really cool thing (it integrates with Cargo, which is really nice), criterion.rs offers way more bang for the buck (and integrates just as well).

Criterion automatically runs a statistical comparison of each benchmark against its previous run, giving immediate feedback on whether an optimization was a mistake or not.
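
That comparison boils down to two questions: is the difference statistically significant, and is it bigger than some noise threshold? Below is a simplified sketch of that decision, loosely modelled on how I understand Criterion to work rather than copied from its source. The `Change` and `Verdict` types are hypothetical, and the 5% significance level and 1% noise threshold are assumed defaults (Criterion lets you configure both).

```rust
// Simplified sketch of classifying a benchmark change; loosely modelled on
// Criterion's approach, NOT its actual implementation.

/// Relative change of a benchmark's mean time versus the previous run,
/// e.g. -0.05 means 5% faster. Hypothetical input type.
#[derive(Debug, Clone, Copy)]
struct Change {
    lower: f64,   // lower bound of the confidence interval of the relative change
    upper: f64,   // upper bound of that confidence interval
    p_value: f64, // p-value for the "nothing changed" hypothesis
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Verdict {
    Improved,
    Regressed,
    NoChange,
}

/// A change only counts if it is statistically significant AND the whole
/// confidence interval lies outside the +/- `noise` band.
fn classify(c: Change, significance: f64, noise: f64) -> Verdict {
    if c.p_value >= significance {
        return Verdict::NoChange; // could just be measurement noise
    }
    if c.upper < -noise {
        Verdict::Improved // the entire interval is faster than the noise band
    } else if c.lower > noise {
        Verdict::Regressed // the entire interval is slower than the noise band
    } else {
        Verdict::NoChange // significant, but within the noise threshold
    }
}

fn main() {
    // Assumed defaults: 5% significance level, 1% noise threshold.
    let faster = Change { lower: -0.12, upper: -0.08, p_value: 0.001 };
    let slower = Change { lower: 0.03, upper: 0.06, p_value: 0.01 };
    let tiny = Change { lower: -0.004, upper: 0.007, p_value: 0.02 };
    for c in &[faster, slower, tiny] {
        println!("{:?}", classify(*c, 0.05, 0.01));
    }
}
```

Requiring the whole interval to clear the noise band is what keeps a 0.5% wobble from being reported as a regression.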

It also produces HTML reports and relevant plots using gnuplot.

Benchmarking merge requests

That’s cool when I am running those benchmarks on my own while optimizing stuff. Where this would really shine, though, is in the extreme feedback world, or at least in the regular merge request feedback world.

GitHub has those integrations with external CI services that decorate pull requests with “checks” and a short description, remember? It turns out GitLab provides the same feature through its Commit API, which lets you attach a status to any commit.

So the idea is to benchmark a merge request against the master branch, and then use the Commit API to provide feedback in the merge request.
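
One way to do the comparison is with Criterion’s named baselines: benchmark the target branch first and save the results under a name, then benchmark the merge request against that name. The sketch below only builds the command sequence such a CI job could run. `benchmark_plan` is a hypothetical helper, `0123abcd` is a placeholder for the merge request commit (in GitLab CI that would be something like `CI_COMMIT_SHA`), and it assumes `master` exists as a local branch in the CI clone, which is not always the case.

```rust
// Hypothetical helper: the commands a CI job could run to compare a merge
// request against its target branch using Criterion's named baselines.
fn to_args(parts: &[&str]) -> Vec<String> {
    parts.iter().map(|s| s.to_string()).collect()
}

fn benchmark_plan(target_branch: &str, mr_commit: &str) -> Vec<Vec<String>> {
    vec![
        // 1. Benchmark the target branch and save the results as a named baseline.
        to_args(&["git", "checkout", target_branch]),
        to_args(&["cargo", "bench", "--", "--save-baseline", target_branch]),
        // 2. Return to the merge request commit and compare against that baseline.
        to_args(&["git", "checkout", mr_commit]),
        to_args(&["cargo", "bench", "--", "--baseline", target_branch]),
    ]
}

fn main() {
    // "0123abcd" is a placeholder for the merge request's commit SHA.
    for cmd in benchmark_plan("master", "0123abcd") {
        println!("{}", cmd.join(" "));
    }
}
```

Running both halves in the same job keeps them on the same runner, so the comparison isn’t skewed by different hardware. It also relies on Criterion keeping its baselines under `target/criterion`, which is typically git-ignored and therefore survives the checkout.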

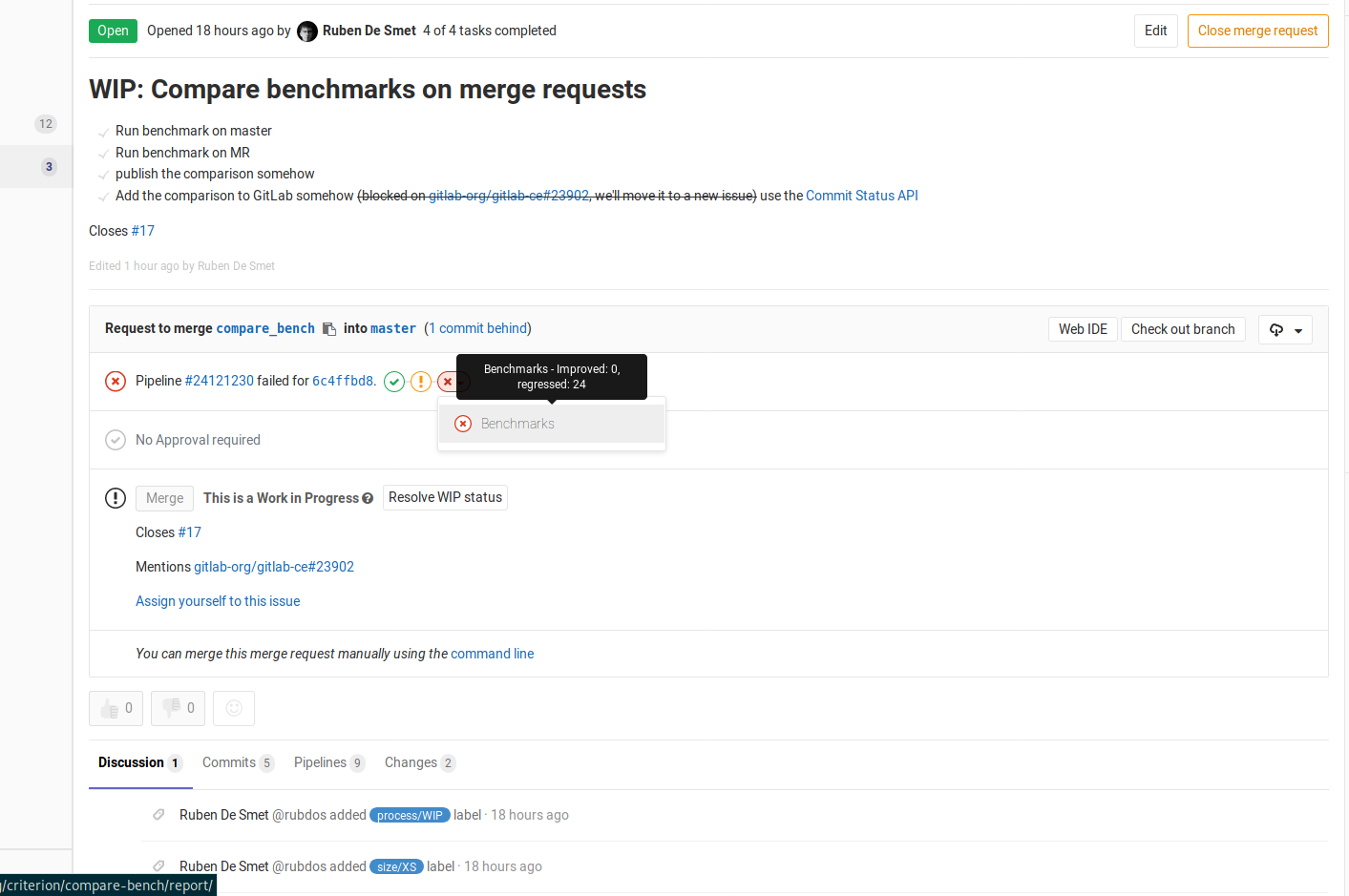

And the above is how it looks.

There is an additional item in the pipeline: hovering over it shows the number of improvements and regressions, and clicking it (note the URL at the bottom of the image) takes you to the Criterion report pages.

Note that none of this requires an external service.

It’s just a shell script that uses jq to parse Criterion’s JSON output for every pair of benchmark runs, and curl to dump the result on the GitLab API.
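
The shell script itself isn’t reproduced here, but the request curl sends can be sketched in Rust. As I read GitLab’s API documentation, the commit status endpoint is `POST /projects/:id/statuses/:sha`, taking `state`, `name`, `description` and `target_url` parameters. `CommitStatus`, `status_request`, the `https://gitlab.com/api/v4` base URL, the project path and the SHA are illustrative assumptions. Actually sending the request would also need a `PRIVATE-TOKEN` header with an access token, which is exactly what a secret CI variable is for.

```rust
// Sketch: build the URL and form body for posting a commit status to GitLab
// (POST /projects/:id/statuses/:sha). Sending it, e.g. with curl and a
// PRIVATE-TOKEN header, is left out to keep this std-only.

/// Percent-encode everything except RFC 3986 unreserved characters.
fn percent_encode(s: &str) -> String {
    let mut out = String::new();
    for b in s.bytes() {
        match b {
            b'A'..=b'Z' | b'a'..=b'z' | b'0'..=b'9' | b'-' | b'_' | b'.' | b'~' => {
                out.push(b as char)
            }
            _ => out.push_str(&format!("%{:02X}", b)),
        }
    }
    out
}

/// The commit status fields used here (hypothetical type).
struct CommitStatus<'a> {
    state: &'a str,       // e.g. "success" or "failed"
    name: &'a str,        // label of the pipeline item
    description: &'a str, // shown when hovering over the item
    target_url: &'a str,  // opened when clicking the item
}

/// Returns the endpoint URL and the form-encoded request body.
fn status_request(api_base: &str, project: &str, sha: &str, status: &CommitStatus) -> (String, String) {
    // A project can be referenced by its URL-encoded path, e.g. "group%2Fproject".
    let url = format!("{}/projects/{}/statuses/{}", api_base, percent_encode(project), sha);
    let fields = [
        ("state", status.state),
        ("name", status.name),
        ("description", status.description),
        ("target_url", status.target_url),
    ];
    let body = fields
        .iter()
        .map(|&(key, value)| format!("{}={}", key, percent_encode(value)))
        .collect::<Vec<_>>()
        .join("&");
    (url, body)
}

fn main() {
    let status = CommitStatus {
        state: "failed",
        name: "benchmarks",
        description: "3 improvements, 1 regression",
        target_url: "https://example.gitlab.io/criterion/report/index.html",
    };
    let (url, body) = status_request("https://gitlab.com/api/v4", "group/project", "0123abcd", &status);
    println!("POST {}\n{}", url, body);
}
```

The `description` is what shows up when hovering over the pipeline item, and `target_url` is where clicking it takes you, so pointing it at the published Criterion report gives the behaviour described above.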

This way, every merge request I open gets a nice checkmark if the performance improved or stayed the same (within a noise threshold), or a red cross like in the image when I messed something up.
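
The pass/fail rule itself is simple. Here is a sketch of how per-benchmark verdicts could be folded into the commit status state and the hover text; the `Verdict` type, the helper names and the exact wording of the description are my own illustration, not taken from the actual script.

```rust
// Hypothetical summary step: turn per-benchmark verdicts into a commit
// status state and the hover text of the pipeline item.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Verdict {
    Improved,
    Regressed,
    NoChange, // includes changes within the noise threshold
}

fn plural(n: usize, word: &str) -> String {
    format!("{} {}{}", n, word, if n == 1 { "" } else { "s" })
}

/// Any regression turns the check red; improvements and unchanged
/// benchmarks keep it green.
fn summarize(verdicts: &[Verdict]) -> (&'static str, String) {
    let improved = verdicts.iter().filter(|v| **v == Verdict::Improved).count();
    let regressed = verdicts.iter().filter(|v| **v == Verdict::Regressed).count();
    let state = if regressed > 0 { "failed" } else { "success" };
    let description = format!("{}, {}", plural(improved, "improvement"), plural(regressed, "regression"));
    (state, description)
}

fn main() {
    let verdicts = [Verdict::Improved, Verdict::NoChange, Verdict::Improved, Verdict::Regressed];
    let (state, description) = summarize(&verdicts);
    println!("{}: {}", state, description); // failed: 2 improvements, 1 regression
}
```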

I’ve put the code in a GitLab repository. Merge requests are always welcome, and I’ll probably keep updating it as I go.